on

Secure doesn't mean anything.

No, seriously. I’m not trying to be ironic because the title of my blog is “Brindle on Security”, which I should probably change to something more creative anyway.

During my tenure at Gentoo, running the Hardened Gentoo project, the most common question by far was “How do I secure my system?” Warning, this article may contain some flamebait, avoid it if you can’t resist flaming back ![]() .

.

Eventually we gave up and just pointed people to websites, perhaps this post can serve as that page. The answer was “What do you mean ‘secure’ your system?” Security isn’t, and can’t be, a goal by itself. You need to know what exactly you are trying to protect yourself against, your threat model, as it were.

SELinux has the ability to protect against many different threat models. In Hardened Gentoo we also had some complimentary projects that protected against things that SELinux couldn’t, such as PaX (kernel level memory execution protection and memory layout randomization) and SSP (userspace level stack smashing protection). Fedora also has comparable technologies such as Exec Shield baked in by default.

So I saw a thread recently that said something to the concern of “Why use SELinux, which is security bolted onto Linux when you can use OpenBSD which has security as part of the development process?” It’s an interesting question I suppose, if you can decode it. The OpenBSD mantra seems to be develop software correctly in the first place and you don’t need additional layers of security. The baffling part is that you’ll rarely find an OpenBSD user that actually knows what security OpenBSD actually, tangibly provides.

All that said, there are different kinds of security that you might want to implement, and lots of different solutions to attain them.

System Integrity

System integrity is what most people mean when they say “secure my system”. Do you want system integrity? The answer is almost always yes. Some people want very good system integrity and end up using a read only medium, like a livecd or dvd, to run their systems. This, of course, only works if you aren’t storing important data on those systems, for things like web front ends to applications servers it might work fine.

System integrity is what OpenBSD users mean when they say they have a secure system. They integrate quality control into their development process to ensure that as few bugs as possible get into their code and the result is suppose to be high system integrity.

Quality control alone doesn’t give you complete system integrity, however. Your kernel can be well coded but that doesn’t mean much if other processes running as root are vulnerable. Just take a look at ps and see how many applications are running as root. If any of those services are vulnerable your system may have less integrity than previously thought. The fact is everyone runs third party apps on their UNIX/Linux systems. Most users run Apache, samba, syslog, etc and all have components running as root. Even OpenBSD isn’t without vulnerabilities, quality control only goes so far, after that more is necessary.

Memory protection techniques can help protect against some of these vulnerabilities. NX support on modern processors prevent some exploits that use bugs to add shellcode to running applications but that won’t prevent someone from using code already present in the address space, such as system(). Memory layout randomization only provides stop gap protection since the layout can eventually be brute forced, if the administrator isn’t watching very closely.

Mandatory access control is a way of addressing the gaps between a well coded kernel and vulnerable third party applications. If Apache shouldn’t be able to write to anything in /usr then MAC can prevent that. This, by itself, is a huge win for system integrity since it is generally thought that /usr is the highest integrity part of the system.

Now for some explanation. On most systems you can think of integrity as either low or high. High integrity files are files that are system objects. Things in /usr are pretty much all system objects. If a system object gets changed the behavior of the system is changed. A rootkit is going to target these high integrity files, for example. Other examples of high integrity objects are, ofcourse, anything in /boot, /lib, /sbin, /bin, most files in /etc, and so on. User files, on the other hand, are generally low integrity files. Anything in /home is untrusted. User data should never be able to affect the integrity of the system.

The integrity of a process is determined simply by where it gets its input. If a process reads user data, or data off of an untrusted network it is considered low integrity. Apache, which reads /home/*/public_html and receives queries from the internet, is low integrity. RPM, which is run by the administrator, reads rpm’s downloaded from your vendor (hopefully) and installs files all over the system, is high integrity (this is an assessment of how it operates, not whether it is free of vulnerabilities).

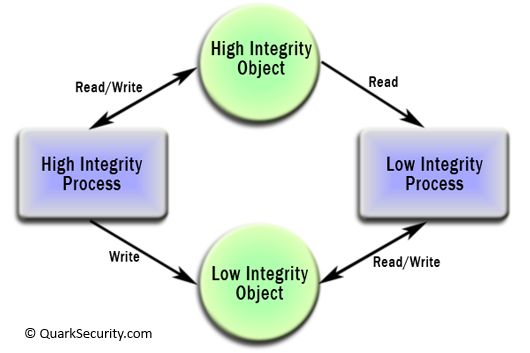

Now that you know how to determine the integrity level of objects and processes lets talk about the security policy to maintain system integrity. Biba is a hierarchical integrity policy, similar to Bell LaPadula but, interestingly, the exact opposite. It allows processes to both read and write to objects of the same integrity, no surprises there. Next it allows high integrity processes to write to low integrity objects, but not read them and last it allows low integrity processes to read high integrity objects but not write them.

Though the use of Biba is very limited and has never hit a mainstream operating system it is a very good practice, if implemented in a usable way. The standard SELinux policies implement something between Biba and ‘least privilege’, with a nice balance to ensure system integrity without making the system completely unusable. Biba, for example, isn’t very flexible. Since processes and objects are simply labeled with their integrity there is no way to make practical changes to the policy. You either fall within the constraints of Biba or you are entirely MAC exempt.

The SELinux Apache policy doesn’t allow Apache to write to the high integrity files we talked about above (/usr, /lib, /bin, etc) while allowing it to write to its logs, its cache, etc. Since objects in SELinux have fine grained labeling we can restrict access to apache high integrity objects, such as apache modules, the apache configuration and so on. In many ways SELinux lets us constrain applications more than Biba while at the same time making practical exceptions when necessary. This brings me to my next kind of security.

Application and Data Integrity

While system integrity is generally of the utmost importance, since it is the basis on which you can build other security goals, in some cases it is not enough. Sometimes the most important part of a system is the applications it is running, or the data those applications manage.

For example, if you are running a database server the most important part of the system quite possibly could be the data in that database. How do you protect that data?

We can go back to the mantra of well coded systems with quality control but that, once again, fails when you consider other applications running on the system. Granted you’ll need to trust the database server itself with the data, there is no way around that but the other applications that can access the data can be problematic. Generally database files would be owned by a non-root user with the correct permissions set to prevent other applications from accessing them without going through the database server. Of course root applications can bypass all those restrictions.

There are multiple ways to protect the data. One way is run only the database server on the system and nothing else. That way there is nothing else to protect the data from. This can work in well controlled environments but there are always going to be apps running, like the system logger and sometimes, particularly on UNIX like systems, there is a tendency to put multiple applications on a single system. There will always be the need for backup apps, log monitors, log rotators, etc. This may be a risk you are willing to take, and that is what threat modeling is all about. If you can accept the risk then your security may already meet your needs.

Another way people have tried recently is with hypervisors. Unfortunately the current generation of hypervisors always require a domain 0 or host OS that essentially has full access to the disk, the network interfaces, sometimes the memory, etc. Protecting the integrity of applications within a VM is impossible without first doing so on the host OS, as I pointed out in my Blu-ray post.

MAC systems are IMHO a superior way to handle application and data integrity. SELinux policies can prevent anyone from accessing the database files aside from the database server itself. You may need the backup service to read the database files directly (unless it is configured to get backups directly from the database server itself) but you can easily make the backup server only able to read files without being able to write them, which is not something you can easily do without MAC.

Pipelines

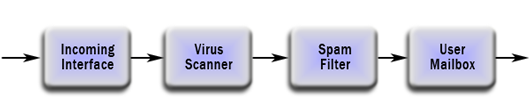

Another security goal which is often overlooked outside of a small niche of guard architects are pipelines. Guards are systems with specific purposes to transfer data from one side to another, while going through a specific set of applications. In the real world one can envision a proxy server or a virus scanning email server as a guard. The idea is that data coming into a system must go through some number of applications before it is allowed to proceed.

In this security goal it is desired that any incoming email goes through a virus and spam filter before going on to the user mail directory and ultimately onto the end users system. This may be more of a functional goal than a security goal to some. As a former administrator I understand the security ramifications of unfiltered email getting through to the network. This kind of goal is generally enforced with configuration of the mail system but for some people that is not enough.

Pipelines obviously rely on system integrity to protect the layer below the pipeline itself, so those security goals are composed onto one another. An opensource project that my company developed called CDS Framework implements this sort of security goal.

Conclusion

There are other security goals one can implement, these are only examples. The first two are probably the most important for most users. The main thing I want people who read this to take away is that there is no such thing as a ‘secure system’ or ‘added on security’ or ‘baked in security’. Security by itself doesn’t mean anything. Users need to determine their threat model and security goals in order to determine what measures need to be taken in order to protect what they want protected.